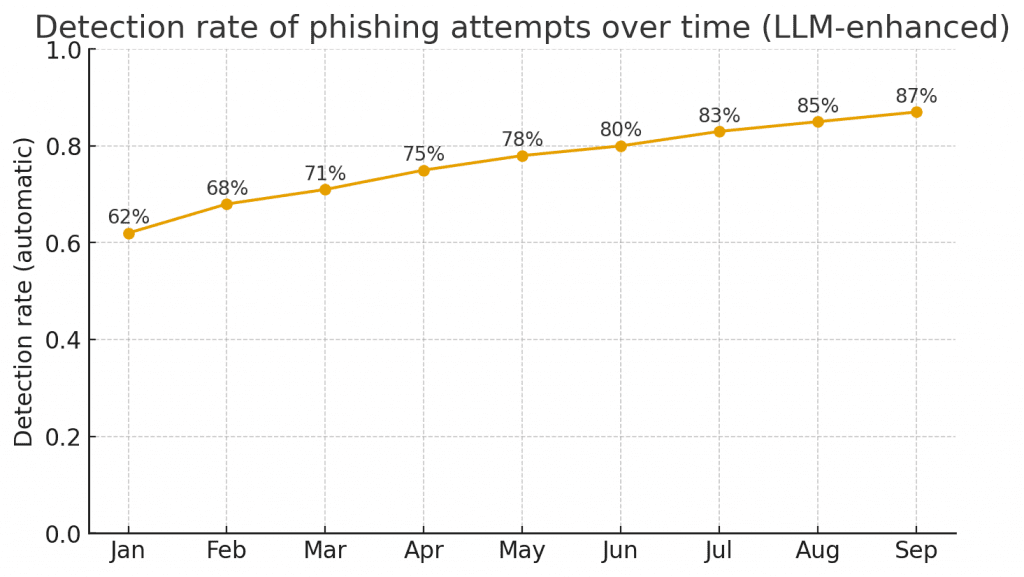

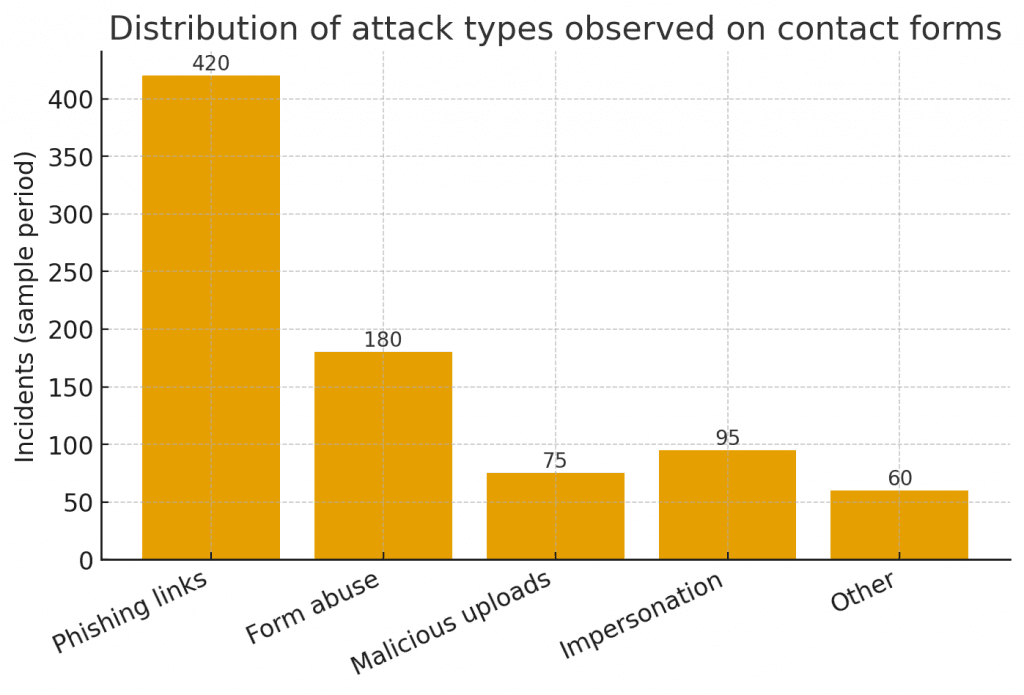

Phishing delivered via web forms and contact pages remains one of the easiest and most effective ways for attackers to social-engineer organizations. Bad actors submit malicious messages, inject harmful links, or use contact forms to initiate fraud and data-exfiltration. Large Language Models (LLMs) are now becoming a powerful addition to the defender’s toolbox — not to replace classic defenses, but to add a context-aware, semantic layer that detects manipulation patterns humans and simple rules often miss.

Below I explain how LLMs fit into the form-security pipeline, show concrete implementation patterns, suggest metrics and thresholds, and share interesting facts teams should know before building a deployment.

Why LLMs complement traditional filters

Classic defenses (regex-based filters, blacklists, basic classifiers) detect obvious badness, but they struggle with subtle, context-sensitive social engineering. LLMs add value because they:

-

Understand semantics, not just tokens — they detect intent and manipulative phrasing.

-

Explain decisions in natural language, producing reasons a moderator can act on.

-

Work with context (multiple fields, metadata, previous interactions), enabling better risk scoring.

-

Adapt quickly via prompt tweaks or lightweight fine-tuning for your site’s language and abuse patterns.

Interesting fact: when combined with small labeled datasets, LLMs can often generalize to novel phrasing of scams far faster than retraining a large traditional ML classifier from scratch — enabling faster response to evolving phishing lures.

Where to insert an LLM in the form flow

A practical deployment usually has multiple stages:

-

Client-side soft-check (optional). A low-cost, prompt-lite inference in the browser or at the edge can provide immediate UX feedback (e.g., “This message looks unusual; are you sure?”) without blocking legitimate users.

-

Server-side classification. The canonical stage: full context (form fields, IP, UA, timestamps) is sent to the model for a risk score and an explanation.

-

Human review gate. High-risk or low-confidence cases are routed to an analyst with the model’s rationale and evidence highlighted.

-

Feedback loop. Moderator decisions (approve/deny) are fed back into the system to refine prompts, thresholds and, if used, lightweight fine-tuning datasets.

Concrete detection patterns

Semantic intent classification

Ask the model to label the submission: legitimate, spam, phishing, impersonation, other. Include a short justification field so reviewers see why it flagged the item.

Link and domain context checks

LLMs can flag inconsistencies between link text and actual URL, spot shortened links, and detect typosquatting (e.g., paypa1.com vs paypal.com) when paired with simple similarity checks on domain names.

Manipulative language detection

Phrases such as “urgent transfer,” “confidential,” “do not tell anyone,” or high-pressure wording are strong signals. LLMs catch these even when attacker uses obfuscation and synonyms.

Cross-field correlation

If a message claims to be from an employee but the email field domain doesn’t match the organization, the LLM can combine that signal with wording and timing for a higher risk score.

Explainable outputs for moderators

Instead of score=0.92, return:

This speeds human triage.

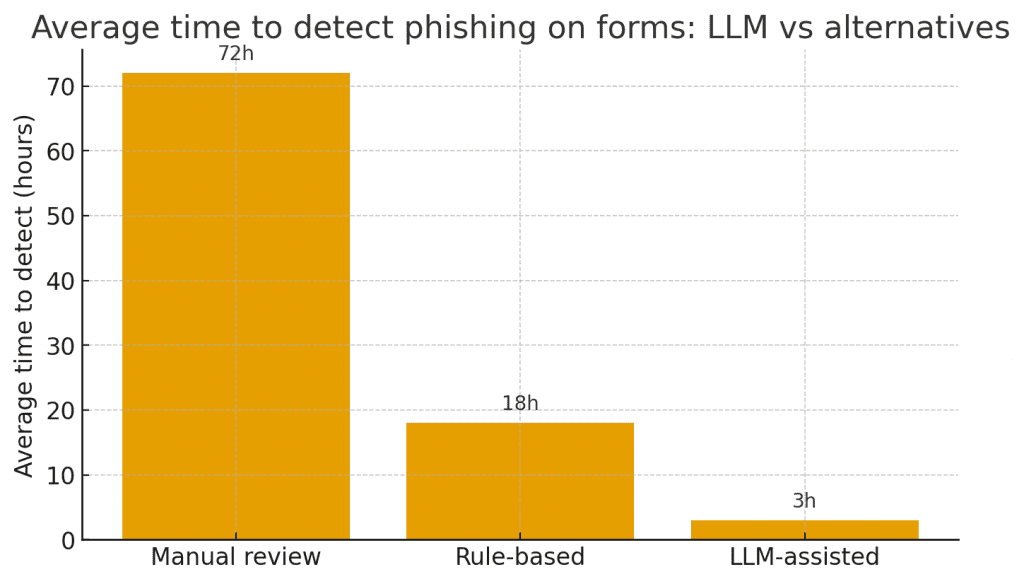

Metrics and operational thresholds

Track and tune a small set of KPIs:

-

Containment rate: % of malicious submissions blocked or held automatically.

-

False positive rate: % legitimate submissions flagged — keep this low to avoid user friction.

-

Mean time to review (MTTR) for items routed to humans.

-

User acceptance / success: how often suggested safe responses or automated replies are edited/overridden.

-

Cost per decision: model tokens + infra. Use it to decide what checks stay server-side vs. edge.

Practical tip: start with a conservative threshold (low false positives), run the model in shadow mode for 2–4 weeks, and then lower thresholds as you gain confidence.

Privacy, safety and governance

-

Minimize PII sent to third-party models. Redact or pseudonymize fields before sending; consider on-prem or private LLM options for sensitive data.

-

Log provenance: always store the exact prompt, model version, and response for audit and rollback.

-

Human-in-the-loop for high impact: never auto-execute actions that change money, access, or legal status without a human review.

-

Adversarial testing: run red-team exercises to probe the model with crafted inputs and measure escape rates.

Interesting fact: Many teams find that adding a tiny verifier prompt — a second, focused LLM call that cross-checks the first output against retrieval evidence (RAG) — drastically lowers hallucination-derived false positives.

Engineering blueprint (lightweight)

-

Collect a seed dataset of ~200–1000 labeled form submissions (legit vs phishing vs spam).

-

Prototype prompts that produce structured JSON (label + reasons + suggested action).

-

Run shadow ops: score real traffic but don’t block; collect metrics for 2–4 weeks.

-

Introduce soft UX signals: warnings or suggestions on the form for low-confidence flags.

-

Deploy conservative auto-hold: only auto-hold extremely high risk, route medium risk to human queue.

-

Automate feedback loop: moderators’ decisions update a labeled store used for prompt tuning and analytics.

Limitations & how to mitigate them

-

LLMs can be gamed. Attackers try to craft messages that avoid detection. Counter with continual adversarial testing and prompt hardening.

-

Cost: high-volume inference can be expensive. Optimize via caching, tiered models (small model for rough triage, larger model for high-risk cases), and batching.

-

Latency: real-time UX checks need fast responses; use edge or small models for on-submit checks and defer heavy checks to the server.

Quick wins you can implement this week

-

Add a server-side LLM scoring endpoint that returns

risk_score+reasonfor each form submission. Run it in shadow mode. -

Implement JSON schema validation and a “hold_for_review” path in your workflow.

-

Create a simple moderator interface showing the submission, model reasons, and action buttons (approve/reject) — track decisions.

Final takeaway

LLMs are not a silver bullet, but they are a fast, effective way to catch nuanced phishing attempts that slip past signature-based defenses. With a layered approach — lightweight client checks, server-side semantic scoring, human gates for high-impact actions, and a feedback loop — teams can significantly reduce phishing risk on web forms while maintaining user experience and privacy. Start in shadow mode, measure carefully, and iterate: the combination of context-aware models plus classic security controls makes web forms far harder for attackers to abuse.