Short answer: Vibe coding — relying heavily on AI assistants to write, patch, or scaffold web application code with little human security oversight — can be a huge productivity booster, but it introduces measurable security risks if you don’t build security into the workflow. When practiced responsibly (security-in-the-loop), it’s useful; when treated as a drop-in replacement for secure development practices, it’s risky.

Below I explain what “vibe coding” means, show hard industry data about the risks, list the problems you can encounter, and give a concrete checklist and technical mitigations so you can use AI safely in web projects.

What is “vibe coding”?

“Vibe coding” is industry shorthand (the term was popularized by Andrej Karpathy in early 2025 and quickly picked up in reporting about AI coding assistants) for the mode where developers lean heavily on AI suggestions: they accept generated snippets, scaffolding, and refactors with minimal prompt engineering or security constraints, and often without thorough security review. The phrase captures a casual, high-velocity style of coding powered by AI autocompletions and code-generation tools. Reports and research into AI-generated code have used the term specifically to describe risky, low-governance usage patterns.

Hard facts and the security context (what the data says)

-

AI-generated code often contains security flaws. Veracode’s GenAI code security study found that only ~55% of AI-generated code passed security tests — in other words, 45% of generation attempts introduced known security vulnerabilities (across 100+ LLMs and many languages). Java samples showed especially high failure rates for security issues.

-

Web apps and APIs are under massive attack volume. Industry telemetry shows enormous volumes of web attacks: reports indicate hundreds of billions of web attacks annually (Akamai reported explosive increases and cited ~311 billion web attacks in 2024), and large web WAF providers report tens of billions of web application attacks per month. That means any introduction of a vulnerability into a web app is likely to be discovered and exploited quickly.

-

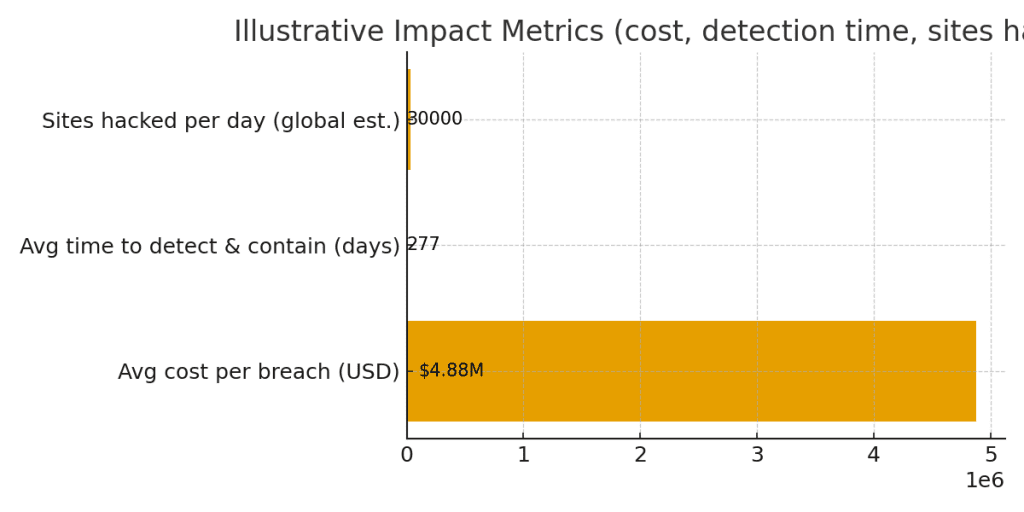

Consequences are expensive. The average cost of a data breach surged in recent IBM analyses — the 2024 IBM Cost of a Data Breach report put the global average around $4.88 million; IBM’s 2025 update continued to highlight the costly business impact and the special risks introduced by ungoverned AI adoption. A single exploitable flaw in a public web app can therefore have outsized operational and financial consequences.

-

OWASP Top 10 vulnerabilities remain central. Many AI-introduced weaknesses map to well-known categories (XSS, injection, broken access control, security misconfiguration). The OWASP Top 10 remains the baseline for what to watch for in web apps.

Why vibe coding increases risk — concrete failure modes

- Injection & XSS from generated snippets. AI often writes convenient SQL or string-concatenation code without safe parameterization. The same goes for templates and JS that don’t escape user input, which are classic injection/XSS vectors. (Veracode found high failure rates for XSS-type patterns.)

- Unsafe defaults & misconfigurations. Generated scaffolding often enables permissive CORS, verbose error dumps, or permissive file uploads, or leaves debug modes on, all of which are exploited in the wild.

- Dependency & supply-chain problems. AI may suggest libraries or code patterns that introduce transitive vulnerabilities, outdated packages, or unsafe native bindings.

- Secrets leakage & data exposure. Prompts that include real secrets (API keys, DB credentials), or code that logs sensitive values, risk leaking them to logs, telemetry, or external inference APIs.

- Authentication & access control errors. AI may produce routes or endpoints without proper authorization checks or role enforcement, producing Broken Access Control issues (an OWASP Top 10 item).

- Overconfidence / hallucinations. LLMs sometimes “hallucinate” APIs or methods that don’t exist, or produce code that compiles but has subtle logic errors enabling privilege escalation or bypasses.

- Lack of contextual dataflow analysis. Security often requires understanding data flow across the app (are inputs sanitized, and where do they flow?). Current AI completions are weak at global dataflow reasoning, which leaves gaps attackers can exploit. (Source: IT Pro)
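To make the injection failure mode concrete, here is a minimal sketch using Python’s built-in `sqlite3` module. The table, the user names, and the function names are hypothetical and chosen only for illustration; the point is the difference between concatenating input into the SQL text and binding it as a parameter.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users (name, role) VALUES (?, ?)",
        [("alice", "admin"), ("bob", "viewer")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input becomes part of the SQL text itself.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safer: the driver binds the value, so it is never parsed as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_demo_db()
    payload = "nobody' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: the filter is bypassed
    print(find_user_safe(conn, payload))         # []: no user has that name
```

The same principle applies to HTML output: pass user input through the template engine’s escaping rather than building markup by concatenation.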

Practical scenarios where problems crop up

- A junior dev accepts an AI snippet that builds SQL strings using concatenation, and the production site becomes vulnerable to SQL injection.

- AI suggests enabling `debug=True` or writes cleartext logging of tokens; an operator forgets to change settings.

- An AI assistant recommends a 3rd-party package with a known RCE CVE, and it is introduced into CI without an SCA (software composition analysis) check.

- Developers send requests including patient or customer data to third-party inference APIs without a BAA or adequate data minimization, creating potential regulatory violations.
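For the debug-mode and token-logging scenario, one possible guard is to read the debug flag from the environment with a safe default and to redact secrets before log records are emitted. This is a sketch under stated assumptions: `APP_DEBUG` is a hypothetical variable name, and the regular expression covers only a few illustrative secret shapes, not every format you may encounter.

```python
import logging
import os
import re

# APP_DEBUG is a hypothetical variable name. Debug stays off unless it is
# explicitly set to "true", so a forgotten setting fails closed.
DEBUG = os.environ.get("APP_DEBUG", "false").strip().lower() == "true"

# Illustrative pattern: bearer tokens and key=value style secrets.
_SECRET_RE = re.compile(r"(?i)(bearer\s+|(?:token|api_key|password)=)([^\s&]+)")


def redact(text: str) -> str:
    """Replace the secret part of each match with a fixed marker."""
    return _SECRET_RE.sub(lambda m: m.group(1) + "[REDACTED]", text)


class RedactingFilter(logging.Filter):
    """Logging filter that redacts secrets before a record is emitted."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())
        record.args = None  # the message is already fully formatted
        return True


logger = logging.getLogger("app")
logger.addFilter(RedactingFilter())
```

With the filter attached, a call such as `logger.warning("login with token=%s", token)` is logged with the token value replaced by `[REDACTED]`.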

How to use vibe coding safely: policies, pipeline, and toolchain

Treat AI-generated code like third-party code: assume it is untrusted until validated.

1) Governance & policy (process changes)

- Policy: Require that any AI-generated code pass the same gates as external PRs: automated SAST/DAST, dependency scans, and at least one senior reviewer.

- Prompt policy: Prohibit pasting secrets or PHI into public LLM prompts. Use internal/private models or on-prem inference for sensitive work.

- Training: Educate dev teams and reviewers on common AI-introduced risks and OWASP Top 10 patterns.
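The prompt policy above can be backed by a technical control, for example a check that runs before a prompt leaves your network. The sketch below is an assumption about how such a check might look; the pattern set is deliberately small and illustrative, and `check_prompt` is a hypothetical helper, not part of any LLM client library.

```python
import re

# Illustrative patterns only; a real deployment would use a maintained ruleset.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "password_assignment": re.compile(
        r"(?i)\b(?:password|passwd|secret)\s*[:=]\s*\S+"
    ),
}


def find_secrets(prompt: str) -> list[str]:
    """Return the names of all patterns that match the prompt."""
    return [name for name, pat in SECRET_PATTERNS.items() if pat.search(prompt)]


def check_prompt(prompt: str) -> str:
    """Return the prompt unchanged, or raise if it appears to contain a secret."""
    hits = find_secrets(prompt)
    if hits:
        raise ValueError("prompt blocked, possible secrets: " + ", ".join(hits))
    return prompt
```

A check like this catches only obvious cases, so it complements the policy and training rather than replacing them.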

2) CI/CD safety gates (automation)

- SAST & rules: Run static analysis on every PR (Snyk, Semgrep, SonarQube). Create rules tuned to typical AI mistakes (unsanitized templates, log injection).

- DAST / fuzzing: Periodically run dynamic scanning and runtime testing against staging.

- SCA (dependency scanning): Block merges with critical CVEs or flagged transitive packages.

- Unit + integration tests: Coverage must include security-relevant flows (auth, input sanitization, error paths).

- Policy as code: Enforce infrastructure and secret management rules in IaC pipelines (e.g., Terrascan, Checkov).
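As an illustration of a rule tuned to a typical AI mistake, the following sketch uses Python’s standard `ast` module to flag `.execute(...)` calls whose first argument is built dynamically. It is a simplified heuristic written for this article, not a replacement for the tools named above: it will miss queries assembled in a variable first, and it will also flag harmless concatenation of constants.

```python
import ast


def _is_dynamic_string(node: ast.AST) -> bool:
    """True for f-strings with values, + or % string building, and .format()."""
    if isinstance(node, ast.JoinedStr):
        return any(isinstance(v, ast.FormattedValue) for v in node.values)
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Add, ast.Mod)):
        return True
    return (
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Attribute)
        and node.func.attr == "format"
    )


def find_risky_execute_calls(source: str) -> list[int]:
    """Return line numbers of execute()/executemany() calls with dynamic SQL."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr in {"execute", "executemany"}
            and node.args
            and _is_dynamic_string(node.args[0])
        ):
            findings.append(node.lineno)
    return sorted(findings)
```

In CI, a script could run this over changed files and fail the job when the returned list is non-empty.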

3) Model & prompt engineering controls

- Security-aware prompting: When using AI for code, include prompts like “produce only parameterized queries; avoid user-submitted string concatenation; include comments explaining input validation.”

- Private / on-prem models: For sensitive projects, prefer private models hosted inside your security perimeter to reduce data-exfiltration risk and help comply with BAAs and regulations.

- Audit logging: Keep an immutable record (who used which prompt, what code was generated) for audits and incident investigations.
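One way to approximate the audit record described above is a hash chain, where each entry’s hash covers the previous entry’s hash. The sketch below is tamper-evident rather than truly immutable (real immutability needs write-once storage), and the field names are assumptions made for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with this entry's payload."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("ascii") + body).hexdigest()


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def append(self, user: str, prompt: str, generated_code: str,
               timestamp: str) -> dict:
        payload = {
            "user": user,
            "prompt": prompt,
            "generated_code": generated_code,
            "timestamp": timestamp,
        }
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {"payload": payload, "prev": prev, "hash": _digest(prev, payload)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["payload"]):
                return False
            prev = entry["hash"]
        return True
```

Storing the latest hash somewhere the application cannot overwrite (for example in a separate system) is what makes later tampering detectable.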

4) Runtime defenses (assume breach)

- WAF & rate limits: Ensure robust WAF rules and per-endpoint rate limiting to blunt automated exploitation.

- Least privilege: Enforce least-privilege credentials for app components and DB users; segment networks.

- Runtime monitoring: Use EDR/IDS and application logging to detect anomalous behavior and enable rapid containment. (Faster detection reduces breach cost.)
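Per-endpoint rate limiting is often implemented with a token bucket. The following is a minimal in-memory sketch, assuming a single process; the class names and the `(client_id, endpoint)` key are illustrative choices, and a production setup would more likely enforce limits at the WAF, gateway, or a shared store.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class PerEndpointLimiter:
    """Keep one bucket per (client, endpoint) pair."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self._buckets: dict[tuple[str, str], TokenBucket] = {}

    def allow(self, client_id: str, endpoint: str) -> bool:
        key = (client_id, endpoint)
        bucket = self._buckets.get(key)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.capacity, self.clock)
            self._buckets[key] = bucket
        return bucket.allow()
```

A request handler would call `limiter.allow(client_id, path)` and return HTTP 429 when it yields `False`.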

5) Human-in-the-loop & sign-off

- Security code reviews: Senior engineers or security champions must approve AI-generated security-sensitive code.

- Threat modeling: For new endpoints generated by AI, run a lightweight threat model (for example STRIDE or a threat-modeling cheat sheet) before deploying to production.

| Metric | Value (illustrative) | Notes / Source |
|---|---|---|
| AI-generated code insecure rate | ≈45% | Veracode-style findings: many AI completions introduce vulnerabilities |
| Avg cost of a data breach | $4.88M | IBM Cost of a Data Breach (illustrative) |
| Avg time to identify & contain breach | ≈277 days | Industry telemetry (illustrative) |
| Estimated websites hacked per day | ≈30,000 | Industry estimates/telemetry (illustrative) |

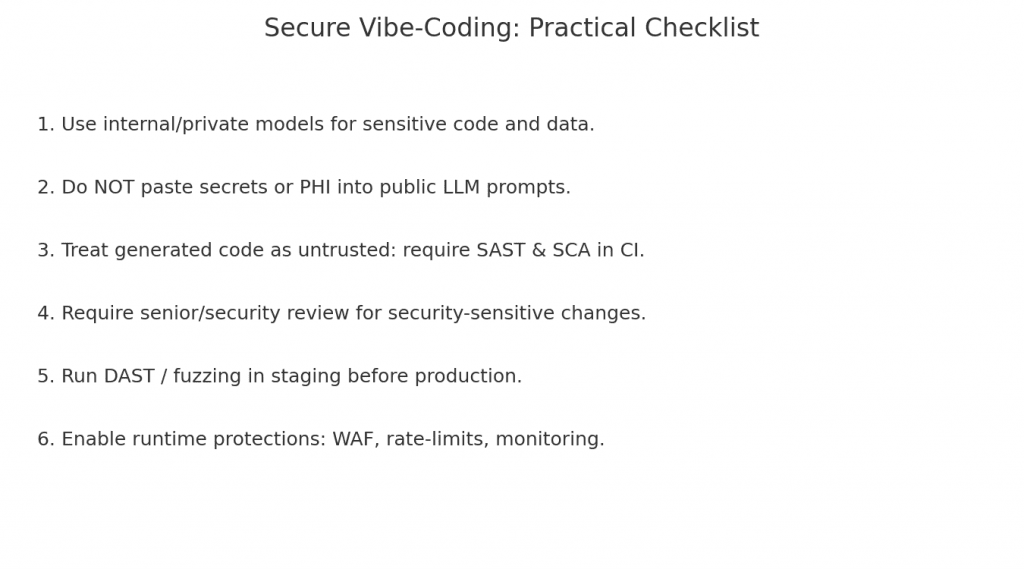

A short security checklist for teams using AI coding assistants

- Use private models or an internal proxy for sensitive prompts.

- Never paste secrets/PHI into third-party LLMs.

- Require SAST + SCA + tests for any AI-assisted PR.

- Keep an audit trail: prompt, user, generated output, and PR link.

- Add OWASP Top 10 checks to CI rules.

- Enforce runtime WAF, rate-limiting, and monitoring.

- Run a short pilot measuring security false positives/negatives before expanding AI use.

Example: how a safe workflow might look (practical)

- Developer prototypes with AI using an internal, private LLM.

- Generated code is saved to a draft PR.

- CI runs: unit tests, SAST, SCA, and a DAST smoke test on a containerized staging environment.

- Security champion reviews and requests changes if SAST flags appear.

- After approval, merge and deploy to canary with elevated logging for 72 hours.

- If any runtime anomaly is detected, roll back and investigate using the audit trail.
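The gating part of this workflow (CI checks plus security sign-off before merge) can be expressed as a small decision function. This is a hypothetical sketch: the `PipelineResult` fields stand in for whatever your CI system and scanners actually report, and the thresholds (zero open SAST findings, zero critical CVEs) are assumptions you would tune.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineResult:
    tests_passed: bool
    open_sast_findings: int   # unresolved static-analysis findings
    critical_cves: int        # critical CVEs reported by SCA
    dast_smoke_passed: bool
    security_approved: bool   # security champion sign-off


def merge_blockers(result: PipelineResult) -> list[str]:
    """Return the reasons a PR may not merge; an empty list means it may."""
    blockers = []
    if not result.tests_passed:
        blockers.append("unit/integration tests failed")
    if result.open_sast_findings > 0:
        blockers.append(f"{result.open_sast_findings} open SAST finding(s)")
    if result.critical_cves > 0:
        blockers.append(f"{result.critical_cves} critical CVE(s) from SCA")
    if not result.dast_smoke_passed:
        blockers.append("DAST smoke test failed")
    if not result.security_approved:
        blockers.append("missing security champion approval")
    return blockers
```

Returning the list of reasons, rather than a bare boolean, gives the developer something actionable in the PR status.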

Final takeaway: balance speed with assurance

AI tooling (the vibe coding style) delivers major productivity gains — scaffolding, faster prototyping, and boilerplate elimination. But research shows AI-generated code frequently introduces vulnerabilities and web apps are already a major target for attackers. If you adopt AI, adopt it with security-first workflows:

- Treat generated code as untrusted until vetted.

- Automate security checks into your CI/CD as non-optional gates.

- Prefer private/on-prem inference for sensitive systems.

- Keep humans in the loop for high-risk, security-sensitive flows.

If you do those things, you can keep the upside of AI while dramatically reducing the downside. If you don’t, you’ll be betting your web app’s security on a model that — studies show — produces insecure code roughly 45% of the time and in a landscape where attackers scan and weaponize weaknesses at massive scale.