Vibe coding — relying on LLMs to produce runnable code while minimizing human review — speeds iteration and lowers the barrier to experimentation. But that same shortcut concentrates and multiplies security risk: LLMs replicate insecure patterns at scale, miss system-level assumptions, leak secrets, and create brittle, unobserved attack surfaces. In production settings, adopting vibe coding without strong governance and security engineering creates measurable, addressable hazards.

Key research and reporting underpinning the claims below includes independent studies that find high rates of security defects in AI-generated code, along with industry warnings about running unvetted AI code in production.

What is vibe coding?

Vibe coding is the practice of describing your desired behavior to an LLM-based assistant — often in natural language — and accepting the generated code with minimal line-by-line review. The practitioner relies on iterative execution and adjustments (“ask, run, refine”) instead of carefully reading or reasoning about the produced source. The phrase was popularized in 2025 to describe a shift toward conversational, prompt-driven development where human reviewers emphasize results and behavior over source comprehension.

Key characteristics of vibe coding:

- Natural-language-first interaction rather than writing code character-by-character.
- Rapid, iterative feedback loops: run the output, observe, repeat.
- Lower emphasis on inspecting the produced code; more reliance on tests and behavior.
- Heavy reliance on toolchains that incorporate LLMs as primary code producers (editor assistants, agent-driven code generators, etc.).

This style is powerful for prototypes and single-developer utilities, but the mental model is distinct from traditional engineering practices that prioritize code comprehension, static review, and defensive design.

Why vibe coding caught on

Vibe coding spread quickly for simple reasons:

- Speed and flow: Developers report dramatic reductions in time-to-prototype for UI tweaks, glue logic, and scripting tasks.
- Lower barrier for non-experts: People without formal programming experience can generate working tools for personal use.
- Tooling availability: Editor agents, hosted “make-an-app-from-text” services, and integrated development assistants enable conversational software creation.
- Creative iteration: Because changes can be described in plain language, product experimentation accelerates.

However, the mechanisms that make vibe coding fast are the same ones that enable security problems when it is applied without controls. When developers stop reading code and rely on the AI’s fluency, systemic assumptions and edge-case vulnerabilities slip through.

The security problem statement

Traditional secure development assumes humans author, review, and reason about code — those human steps are where many vulnerabilities are discovered and prevented. Vibe coding reassigns responsibilities:

- From human reasoning → statistical generation: Models generate code based on patterns in training data; they do not understand threat models or runtime assumptions unless prompted and validated.
- From explicit review → implicit testing: Code acceptance is often based on successful runs and test scaffolds rather than manual security inspection.
- From incremental review → scale replication: A single insecure pattern generated by an LLM can be reproduced across many repositories and products quickly.

The net effect: vulnerabilities that careful human review would have caught instead propagate silently, and at scale. Recent large-scale audits of AI-generated code are consistent with this picture (TechRadar).

What the data says (industry research & reporting)

We must anchor the discussion in empirical findings.

-

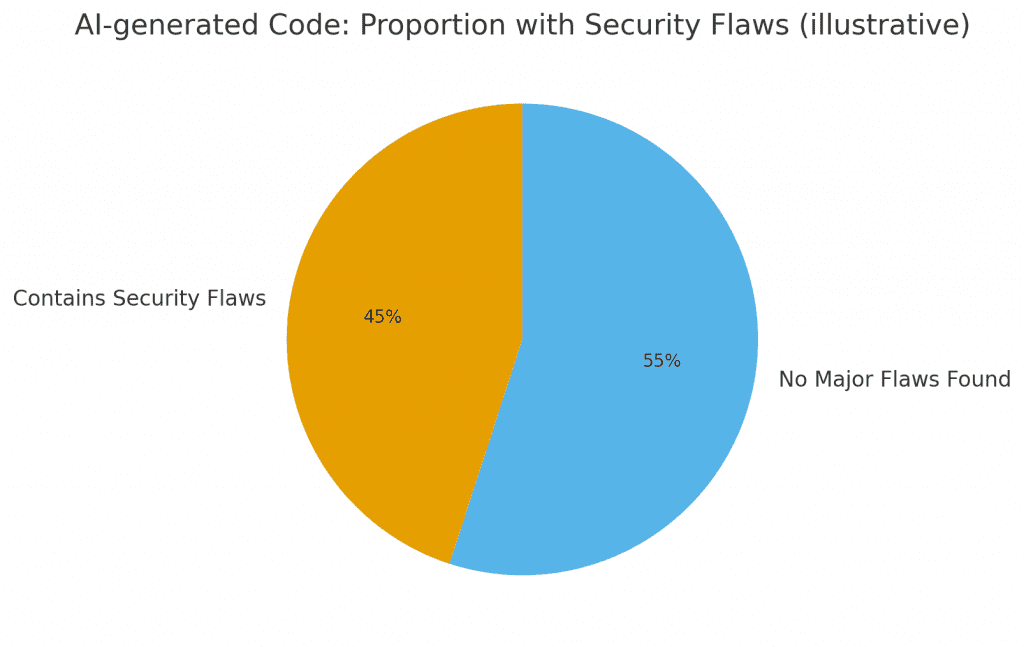

A recent security study analyzed AI-generated code across many popular LLMs and found roughly 45% of generated code snippets contained security-relevant flaws. That is not a marginal increase — it is a systemic risk signal showing that nearly half of AI-produced code can introduce vulnerabilities into a codebase if accepted without mitigation.

-

Industry practitioners and vendors have published posts highlighting common failure modes when AI generates code and how prompting and tooling matter for safe outputs. In-depth engineering blogs show that simple security-aware prompt templates and automated security prompting can reduce but not eliminate insecure pattern generation. Databricks

-

The commercial lifecycle of “vibe coding” platforms also revealed market stresses and user churn as heavy inference usage became expensive and as early adopters encountered reliability and security edge cases. This underscores that the hype cycle has consequences beyond product-market fit — it affects long-term operational risk for companies that depend on generated code. Business Insider

Taken together, these findings measure the problem and point to early mitigations, but they also reveal a gap: flaw rates are high, and although mitigations exist, they are not yet uniformly adopted.

Five concrete threat vectors introduced or magnified by vibe coding

Below are the practical ways vibe coding modifies the attack surface and increases the chance of security incidents.

1. Reproduced insecure coding patterns at scale

LLMs are trained on vast code corpora, which include insecure examples. When prompted without security constraints, models reproduce those patterns — defaulting to simple but insecure practices (e.g., naive string concatenation in SQL, omission of input validation). Because vibe coders may “accept and run” rapidly, these patterns can enter production repeatedly across services.

Why risky: A single insecure pattern, replicated across microservices, multiplies exploitation opportunities.

Mitigation hint: Use security-aware prompts and hard-coded secure templates; add automated checks in CI.
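As a minimal illustration of this pattern, the Python sketch below uses the standard-library `sqlite3` module (an assumption; other DB-API drivers behave similarly but use different placeholder syntax). `find_user_unsafe` shows the naive concatenation an assistant might emit, and `find_user_safe` passes the value as a bound parameter. The table and function names are hypothetical.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Naive pattern: user input is spliced directly into the SQL text,
    # so a value like "x' OR '1'='1" rewrites the query's logic.
    query = "SELECT id, name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized query: the driver binds `name` as data, never as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # every row comes back
    print(find_user_safe(conn, payload))    # no rows: payload is treated as a literal name
```

With the payload `x' OR '1'='1`, the unsafe version returns every row while the parameterized version returns nothing, because the driver treats the payload as a literal value rather than as SQL.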

2. Missing system-level assumptions and threat modeling

AI-generated snippets address the immediate functional requirement — they do not automatically know your host environment, user privilege model, or data sensitivity. Without domain-specific constraints, the result might be correct at the unit level but unsafe at the system level (e.g., exposing internal APIs, over-privileging service accounts).

Why risky: Vulnerabilities often involve misaligned assumptions, not just code syntax.

Mitigation hint: Enforce architecture-level checks and threat-model-based test suites.
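One way to encode system-level assumptions is a small check that runs against a deployment descriptor rather than against the code itself. The sketch below assumes a hypothetical `ServiceSpec` record and a hypothetical role allowlist derived from your threat model; neither corresponds to any specific platform's format.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceSpec:
    # Hypothetical descriptor for a generated service; field names are illustrative.
    name: str
    internal: bool                            # threat model: reachable only internally?
    bind_host: str                            # address the generated code listens on
    roles: set = field(default_factory=set)   # permissions requested by its service account


# Hypothetical least-privilege allowlist derived from the threat model.
ALLOWED_ROLES = {"db:read", "db:write", "queue:publish"}
PUBLIC_BINDS = {"0.0.0.0", "::"}


def check_assumptions(spec: ServiceSpec) -> list:
    """Return threat-model violations that unit tests alone would not catch."""
    problems = []
    if spec.internal and spec.bind_host in PUBLIC_BINDS:
        problems.append(f"{spec.name}: internal service binds to all interfaces ({spec.bind_host})")
    extra = spec.roles - ALLOWED_ROLES
    if extra:
        problems.append(f"{spec.name}: roles outside allowlist: {sorted(extra)}")
    return problems


if __name__ == "__main__":
    spec = ServiceSpec("report-exporter", internal=True, bind_host="0.0.0.0",
                       roles={"db:read", "iam:admin"})
    for problem in check_assumptions(spec):
        print(problem)
```

The example service fails both checks: it is meant to be internal but listens on every interface, and it requests a role outside the allowlist. The generated code could be functionally correct in both cases.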

3. Secret leakage & credential misuse

Vibe coding workflows often encourage copying and pasting example configs, and LLMs can hallucinate or suggest credential-style placeholders. Worse, developers may accidentally paste real secrets into prompts (particularly when experimenting), and those prompts may be logged or stored by vendor services. That can leak secrets to third parties or persist them in model training pipelines unless vendor contracts forbid it.

Why risky: Secret leakage has immediate, high-impact consequences (data breaches, account takeover).

Mitigation hint: Use client-side sanitizers, secret-detection tooling, and dedicated private endpoints with guaranteed prompt privacy.

4. Supply-chain & dependency exposure

Generated scaffolding often comes with dependency manifests (package.json, requirements.txt). LLMs may select convenience packages (unvetted libraries) or outdated versions with known CVEs. Vibe coding encourages rapid composition, but not careful vetting.

Why risky: Unvetted dependencies open a path for supply-chain attacks or transitive vulnerabilities.

Mitigation hint: Integrate SBOMs, dependency scanning, and policy gates into the generation pipeline.

5. Automated attacker acceleration

Malicious actors can use the same vibe-coding approach to speed exploit development: generate vulnerable code patterns, learn how to exploit common misconfigurations, or craft payloads at scale. Research suggests that AI assistance reduces the time to create working exploit code, which raises the stakes on defensive measures.

Why risky: The same tools that increase developer productivity also scale adversary capabilities.

Mitigation hint: Correlate unusual generation patterns with security telemetry and maintain red-team exercises.

Real-world scenarios and illustrative case studies

Below are condensed, anonymized examples showing how vibe coding failures surface.

Scenario A — “Quick plugin, permanent hole”

A product team used an LLM assistant to implement a plugin that ingests user data and writes it to a database. The assistant produced code that built SQL queries by string concatenation for speed. The team verified the plugin by uploading test data, saw the expected behavior, and released it to production. Weeks later, a security audit found the plugin trivially exploitable via SQL injection, reachable by anyone who harvested the API key exposed in the plugin manifest.

Root cause: LLM output reproduced a common pattern (string concatenation) and the team accepted behavior-based tests without in-depth review. Static analysis in CI would have caught the vulnerability.

Scenario B — “Secrets in prompts”

An engineer experimenting locally gave the model full database connection strings to help it generate a migration script. The third-party agent service used for the experiment logged those prompts. Later, the vendor’s logs were subpoenaed in a separate legal matter, and the presence of the credentials in those logs triggered a compliance incident for the developer’s company.

Root cause: Unsafe prompt hygiene and lack of client-side sanitization.

Scenario C — “Dependency mess”

A startup used vibe coding to scaffold an internal microservice and accepted the generated package manifest as-is. The manifest pinned a version of a convenience library with a recently disclosed critical CVE. Because the team assumed the scaffold was low-risk and did not run dependency scanning before deployment, the vulnerability entered production.

Root cause: No dependency policy or SBOM generation for AI-generated manifests.

These examples illustrate a common pattern: acceptance of generated outputs based purely on behavior and surface tests, rather than a security-focused lifecycle.

How these vulnerabilities appear in monitoring and incidents

When vibe coding introduces vulnerabilities, ops teams see certain patterns:

- Higher incident rates in the early weeks after deployment, because generated code often misses edge-case handling.
- Exploit patterns repeating across services: identical vulnerable code patterns appear in multiple codebases, making detection harder because each occurrence looks benign in isolation.
- Unexpected telemetry spikes: e.g., increased error logs from unhandled input cases that indicate a lack of defensive code.
- Compliance red flags: secrets discovered in logs or vendor sidecars trigger reviews and possible breach notifications.

Security teams should instrument for these specific fingerprints if vibe coding is used heavily in the organization.
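The “identical vulnerable pattern in many codebases” fingerprint can be approximated by hashing a normalized form of each snippet and grouping matches. This sketch assumes Python sources and uses the standard `ast` module so that comments and formatting differences are ignored; it only finds structurally identical code, whereas production clone detectors use fuzzier matching. The repository labels are hypothetical.

```python
import ast
import hashlib
from collections import defaultdict


def fingerprint(source: str) -> str:
    """Hash a Python snippet's AST so formatting and comments don't matter."""
    # ast.dump omits comments, whitespace, and (by default) line/column info.
    tree_repr = ast.dump(ast.parse(source))
    return hashlib.sha256(tree_repr.encode("utf-8")).hexdigest()


def find_repeats(snippets: dict) -> dict:
    """Map fingerprint -> locations, keeping only fingerprints seen more than once.

    `snippets` maps a location label (e.g. "repo/path.py") to source text.
    """
    groups = defaultdict(list)
    for location, source in snippets.items():
        groups[fingerprint(source)].append(location)
    return {fp: locs for fp, locs in groups.items() if len(locs) > 1}


if __name__ == "__main__":
    a = 'q = "SELECT * FROM t WHERE id = " + user_id  # service A'
    b = 'q  =  "SELECT * FROM t WHERE id = " +  user_id'
    c = 'q = "SELECT * FROM t WHERE id = ?"'
    repeats = find_repeats({"svc-a/db.py": a, "svc-b/db.py": b, "svc-c/db.py": c})
    print(list(repeats.values()))
```

Snippets `a` and `b` differ only in spacing and a comment, so they share a fingerprint; `c` uses a placeholder and is grouped separately. Once one occurrence is confirmed vulnerable, the same hash points to every other copy.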

Engineering mitigations: what actually reduces risk

There is no single “silver-bullet” fix; defense requires layered controls. Below are specific, actionable mitigations that balance productivity and security.

A. Security-aware prompt templates

Design prompt templates that require the assistant to follow explicit secure coding constraints. Example prompt instructions:

“Generate a parameterized SQL query using prepared statements. Validate and canonicalize inputs. Include unit tests that exercise injection attempts.”

Enforcing secure instructions lowers the chance of a naive pattern appearing. Research indicates that prompting for security behavior reduces insecure generations when combined with verification (Databricks).
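Templates like this are easiest to enforce when the constraints are attached in code rather than retyped by each developer. The following Python sketch is one hypothetical way to do that; `SECURE_CONSTRAINTS` and `build_prompt` are illustrative names, and the constraint wording should come from your own secure-coding standard.

```python
from string import Template

# Hypothetical baseline constraints; tune these to your own secure-coding standard.
SECURE_CONSTRAINTS = [
    "Use parameterized queries or prepared statements; never build SQL by string concatenation.",
    "Validate and canonicalize all external inputs before use.",
    "Do not hard-code credentials; read secrets from the environment or a secret manager.",
    "Include unit tests that exercise injection attempts and boundary values.",
]

PROMPT_TEMPLATE = Template(
    "Task: $task\n"
    "Context and assumptions: $assumptions\n"
    "Mandatory security requirements:\n$constraints\n"
    "Before the code, list any requirement you could not satisfy and why."
)


def build_prompt(task: str, assumptions: str, extra_constraints=None) -> str:
    """Wrap a natural-language task in a fixed, numbered set of security constraints."""
    constraints = SECURE_CONSTRAINTS + list(extra_constraints or [])
    numbered = "\n".join(f"{i}. {c}" for i, c in enumerate(constraints, start=1))
    return PROMPT_TEMPLATE.substitute(task=task, assumptions=assumptions, constraints=numbered)


if __name__ == "__main__":
    print(build_prompt(
        "Add an endpoint that looks up orders by customer email.",
        "PostgreSQL backend; requests come from unauthenticated clients.",
        ["Rate-limit the endpoint."],
    ))
```

Keeping the constraints in one versioned list means a security team can tighten them once and every generation request picks up the change. The final instruction asks the model to surface unmet requirements, which gives reviewers something concrete to check.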

B. Automated security gates in CI/CD

Automate static analysis, SAST, dependency scanning, and secret detection on every generated commit. Make the generation-to-PR workflow fail builds for major classes of findings (e.g., unsanitized SQL, direct crypto misuses).

Why it works: Automation catches common classes of errors faster than ad-hoc human review.

C. Provenance & immutable evidence capture

Record the exact prompt, model id/version, and generated output (or a hashed artifact) for every generation step. This supports auditing and root-cause analysis when something goes wrong.

Why it works: You can trace the origin of vulnerabilities and hold processes accountable.
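A minimal sketch of such a record, assuming a Python service writes one JSON-serializable entry per generation step. Each entry stores the prompt, the model identifier, and a SHA-256 hash of the output, and links to the previous entry’s hash so that edits are detectable. The field names and the hash-chain design are assumptions for illustration, not a standard format.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def make_record(prompt: str, model_id: str, output: str, prev_hash: str,
                keep_output: bool = False) -> dict:
    """One provenance entry; `prev_hash` links it to the previous entry."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,            # provider model name plus version
        "prompt": prompt,                # sanitize secrets before logging
        "output_sha256": _sha256(output),
        "prev_hash": prev_hash,
    }
    if keep_output:
        record["output"] = output
    # Hash the canonical JSON of the entry itself (computed before this field exists).
    record["record_hash"] = _sha256(json.dumps(record, sort_keys=True))
    return record


def verify_chain(records: list) -> bool:
    """Recompute every hash; an edited or removed entry (other than the last) breaks the chain."""
    prev = GENESIS
    for rec in records:
        body = {k: v for k, v in rec.items() if k != "record_hash"}
        if rec["prev_hash"] != prev or _sha256(json.dumps(body, sort_keys=True)) != rec["record_hash"]:
            return False
        prev = rec["record_hash"]
    return True
```

Hash chaining makes tampering detectable but does not stop someone from rewriting the whole log, so in practice the entries (or at least the latest hash) should also go to append-only or write-once storage.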

D. Human-in-the-loop approval for risky outputs

Classify outputs by risk (e.g., network-facing code, auth code, payment flows). For high-risk categories, require mandatory human security review before merge.

Why it works: Critical code paths require human judgment that LLMs can’t replicate reliably.
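Risk classification can start as simple path matching on the files a generated PR touches. In the Python sketch below, the category names mirror the examples above, while the glob patterns are hypothetical and would need to match your repository layout.

```python
from fnmatch import fnmatch

# Hypothetical path patterns; map them to your own repository layout.
HIGH_RISK_PATTERNS = {
    "auth": ["*/auth/*", "*login*", "*session*"],
    "payments": ["*/payments/*", "*billing*"],
    "network-facing": ["*/api/*", "*/handlers/*"],
}


def classify(changed_paths: list) -> dict:
    """Return {category: [paths]} for every high-risk category a change touches."""
    hits = {}
    for path in changed_paths:
        for category, patterns in HIGH_RISK_PATTERNS.items():
            if any(fnmatch(path, p) for p in patterns):
                hits.setdefault(category, []).append(path)
    return hits


def requires_security_review(changed_paths: list) -> bool:
    """Any high-risk hit routes the PR to mandatory human security review."""
    return bool(classify(changed_paths))
```

A PR that only changes, say, `ui/styles/theme.css` passes without mandatory review, while any hit in an auth, payments, or network-facing path routes it to a human reviewer.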

E. Secret-safety tooling & private endpoints

Ensure prompts never include secrets by using local sanitizers and secret-aware clients. When secrets must be used in generation workflows, use isolated, private model endpoints and contractual guarantees from vendors about prompt retention.

Why it works: It prevents accidental exfiltration and reduces compliance risk.
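A local sanitizer can run on every prompt before it is sent to a hosted model. The Python sketch below uses a few illustrative regular expressions (AWS access key IDs, PEM private keys, credentials embedded in URLs, and `key=value` assignments with secret-like names); these rules are assumptions for demonstration, and a real deployment should rely on a maintained secret-detection rule set.

```python
import re

# Illustrative patterns only; production scanners ship far larger, tested rule sets.
REDACTION_RULES = [
    # AWS access key IDs start with the well-known "AKIA" prefix.
    (re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "[REDACTED_AWS_KEY_ID]"),
    # PEM private key blocks.
    (re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----.*?-----END [A-Z ]*PRIVATE KEY-----", re.S),
     "[REDACTED_PRIVATE_KEY]"),
    # Credentials embedded in URLs, e.g. postgres://user:pass@host/db.
    (re.compile(r"(\b[a-z][a-z0-9+.-]*://[^:/\s]+:)[^@\s]+(@)", re.I), r"\1[REDACTED]\2"),
    # key=value or key: value assignments whose name looks secret.
    (re.compile(r"(?i)\b(\w*(?:api[_-]?key|secret|token|passw(?:or)?d)\w*)(\s*[:=]\s*)\S+"),
     r"\1\2[REDACTED]"),
]


def sanitize_prompt(text: str):
    """Return (sanitized_text, number_of_redactions) before text leaves the machine."""
    total = 0
    for pattern, replacement in REDACTION_RULES:
        text, n = pattern.subn(replacement, text)
        total += n
    return text, total
```

Returning the redaction count lets the client warn the developer, or block the request entirely, when a prompt contained something secret-looking.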

F. Dependency policy & SBOMs

Automatically scan generated dependency manifests and enforce allowed-vendor/allowed-version policies. Generate an SBOM for every generated project and track it in your supply-chain processes.

Why it works: It prevents inadvertent inclusion of vulnerable libraries.
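A dependency policy gate can be as small as a script that reads the generated manifest and compares it with an approved list. This Python sketch assumes a `requirements.txt`-style manifest with simple `name==version` lines (extras and environment markers are not handled) and a hypothetical `ALLOWED` map maintained by the security team; it does not look up CVEs itself.

```python
import re

# Hypothetical policy: approved packages and the versions security has reviewed.
ALLOWED = {
    "requests": {"2.32.3"},
    "sqlalchemy": {"2.0.36"},
}

REQ_LINE = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)\s*(==\s*([^\s;#]+))?")


def check_requirements(text: str) -> list:
    """Return policy violations for a generated requirements.txt."""
    violations = []
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue  # skip blanks, comments, and pip options such as -r / --index-url
        m = REQ_LINE.match(line)
        if not m:
            violations.append(f"line {lineno}: unparseable requirement {line!r}")
            continue
        # Normalize names: case-insensitive, and runs of -, _, . are equivalent.
        name = re.sub(r"[-_.]+", "-", m.group(1)).lower()
        version = m.group(3)
        if name not in ALLOWED:
            violations.append(f"line {lineno}: {name} is not on the allowlist")
        elif version is None:
            violations.append(f"line {lineno}: {name} must be pinned with ==")
        elif version not in ALLOWED[name]:
            violations.append(f"line {lineno}: {name}=={version} is not an approved version")
    return violations
```

For vulnerability data, pair a gate like this with a dedicated scanner such as `pip-audit` or an OSV-based tool, and generate the SBOM (for example in CycloneDX format) from the same manifest.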

G. Attack-surface reduction by design

Make generated code run with least privilege, apply network segmentation, and require ephemeral credentials for generated deployment artifacts.

Why it works: Even if code has logic flaws, restricting its privileges limits impact.
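To make “ephemeral credentials” concrete, the sketch below issues and verifies a short-lived, narrowly scoped token with Python’s standard `hmac` module. It illustrates the properties that matter (expiry and scope) under the assumption of a shared signing key; in production you would normally use your cloud provider’s short-lived credential service or an established token format rather than a home-grown one.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii")


def _sign(key: bytes, payload: str) -> str:
    return _b64(hmac.new(key, payload.encode("ascii"), hashlib.sha256).digest())


def issue_token(key: bytes, subject: str, scopes: list, ttl_seconds: int = 900, now=None) -> str:
    """Issue a short-lived, scoped credential for a generated deployment."""
    issued = int(time.time() if now is None else now)
    claims = {"sub": subject, "scopes": sorted(scopes), "exp": issued + ttl_seconds}
    payload = _b64(json.dumps(claims, sort_keys=True).encode("utf-8"))
    return f"{payload}.{_sign(key, payload)}"


def verify_token(key: bytes, token: str, required_scope: str, now=None):
    """Return the claims if the token is authentic, unexpired, and scoped; else None."""
    try:
        payload, sig = token.split(".")
    except ValueError:
        return None
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(sig, _sign(key, payload)):
        return None
    claims = json.loads(base64.urlsafe_b64decode(payload))
    current = time.time() if now is None else now
    if current >= claims["exp"] or required_scope not in claims["scopes"]:
        return None
    return claims
```

A token issued for `queue:publish` with a 60-second lifetime stops working after a minute and is never accepted for any other scope, so a flaw in the generated code that leaks it has a bounded blast radius.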

Organizational controls and governance

A secure vibe-coding program needs organization-level guardrails.

1. Policy: define allowed scopes

Set policy for where vibe coding is allowed: exploratory sandboxes, internal tools, or strictly not allowed in regulated core systems. Configure enforcement via CI policy checks.

2. Training: make prompts safe

Train developers on security-aware prompts and the difference between “accepting behavior” and “accepting code.” Encourage teams to document assumptions in prompts.

3. Tooling investment: bake security into the tooling

Integrate SAST, SBOM, and secret-scanning directly into the agent-to-PR flow so failures are immediate and visible.

4. Metrics: measure what matters

Track the rate of vulnerable findings in generated PRs, the time-to-remediate, and the fraction of generated PRs that required human security review. These KPIs surface regressions early.
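These KPIs are straightforward to compute once PR data is exported. The Python sketch below assumes a hypothetical `GeneratedPR` record populated from your code host and CI scanners; it reports the flaw rate, the median time to remediate, and the fraction of PRs that needed human security review.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class GeneratedPR:
    # Hypothetical record shape; populate it from your code host and scanner output.
    findings: int                          # security findings raised by CI gates
    human_review: bool                     # did it require human security review?
    flagged_at: Optional[datetime] = None  # when the first finding was raised
    fixed_at: Optional[datetime] = None    # when the last finding was resolved


def kpis(prs: list) -> dict:
    """Compute flaw rate, median hours to remediate, and human-review fraction."""
    if not prs:
        return {}
    flagged = [p for p in prs if p.findings > 0]
    fix_hours = [
        (p.fixed_at - p.flagged_at).total_seconds() / 3600
        for p in flagged
        if p.flagged_at and p.fixed_at
    ]
    return {
        "flaw_rate": len(flagged) / len(prs),
        "median_hours_to_remediate": median(fix_hours) if fix_hours else None,
        "human_review_fraction": sum(p.human_review for p in prs) / len(prs),
    }
```

The median is used instead of the mean so that a single long-running remediation does not dominate the time-to-remediate figure.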

5. Procurement & vendor controls

If you use third-party hosted agents, contract terms must guarantee prompt privacy, set explicit data-retention limits, and define clear SLAs for incident response. Test vendors on the basics: do they offer private endpoints, are prompts logged, and what enterprise data-protection options do they provide?

Industry reporting has highlighted market churn and vendor constraints, which makes careful procurement and cost modeling important as well (Business Insider).

A practical blueprint: how to adopt vibe coding safely

If your team wants to use vibe coding while limiting risk, here’s a concrete program you can deploy in 8 weeks.

Week 0–1: Policy & scoping

- Define where vibe coding is allowed (sandbox vs production).
- Create a risk taxonomy and classify code types.

Week 1–2: Tooling setup

- Integrate your LLM agent to generate PRs into a controlled repo.
- Configure pre-commit checks for secret detection.

Week 2–4: CI/CD security gates

- Add SAST, dependency scanning, and unit tests that include adversarial cases (injection payloads, boundary tests).
- Enforce failure on critical categories.
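Adversarial tests of this kind can be small. The Python `unittest` sketch below assumes a hypothetical username policy (lowercase letters, digits, and underscores, 3 to 32 characters) and checks that a hypothetical `normalize_username` helper canonicalizes input with Unicode NFKC normalization, rejects common injection payloads, and enforces both length boundaries.

```python
import re
import unicodedata
import unittest

USERNAME_RE = re.compile(r"[a-z0-9_]{3,32}")  # hypothetical policy


def normalize_username(raw: str) -> str:
    """Canonicalize, then validate; raise ValueError on anything outside policy."""
    # NFKC folds compatibility forms (e.g. fullwidth letters) before checking.
    candidate = unicodedata.normalize("NFKC", raw).strip().lower()
    if not USERNAME_RE.fullmatch(candidate):
        raise ValueError(f"invalid username: {raw!r}")
    return candidate


class AdversarialUsernameTests(unittest.TestCase):
    def test_accepts_normal_input(self):
        self.assertEqual(normalize_username("  Alice_01 "), "alice_01")

    def test_folds_fullwidth_characters(self):
        # Fullwidth "ａｄｍｉｎ" canonicalizes to ASCII "admin".
        self.assertEqual(normalize_username("ａｄｍｉｎ"), "admin")

    def test_rejects_injection_payloads(self):
        for payload in ["x' OR '1'='1", "bob; DROP TABLE users;--",
                        "<script>alert(1)</script>", "../../etc/passwd", "a\x00b"]:
            with self.subTest(payload=payload):
                with self.assertRaises(ValueError):
                    normalize_username(payload)

    def test_enforces_length_boundaries(self):
        self.assertEqual(normalize_username("abc"), "abc")       # minimum length
        self.assertEqual(len(normalize_username("a" * 32)), 32)  # maximum length
        for bad in ["", "ab", "a" * 33]:
            with self.subTest(bad=bad):
                with self.assertRaises(ValueError):
                    normalize_username(bad)
```

Run it with `python -m unittest` pointed at the module. Canonicalizing before validating matters: otherwise lookalike inputs such as fullwidth letters could be treated as distinct from their ASCII forms by later checks.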

Week 4–6: Prompt hygiene & templates

- Build secure prompt templates and small “checklist prompts” that force the model to produce secure code patterns (e.g., “use prepared statements”, “validate inputs”).

Week 6–8: Human review and metrics

- Require human security review for high-risk PRs; instrument metrics (percent flagged, time to fix).
- Run a red-team simulation where the adversary uses vibe coding to find exploitable patterns, and see how quickly your infra detects them.

This program is iterative: after 8 weeks you should tune thresholds and expand or restrict the scope based on risk KPIs.

Checklist: secure vibe-coding minimums (copy-paste friendly)

Before allowing vibe coding in any environment, require:

- Prompt privacy controls: prompts sanitized, or private vendor endpoints used.
- SAST & dependency scanning in CI for generated PRs.
- Secret detection & prevention on the client side and in CI.
- SBOM generation for any produced manifests.
- Mandatory human security review for high-risk code paths.
- Provenance logging for prompts and model outputs.
- Access & privilege boundaries for generated deployments.
- Red-team exercises that use vibe coding techniques to attempt to break your pipelines.
- Vendor contract clauses guaranteeing prompt and data handling policies.
- KPIs for flaw rates in generated PRs and remediation SLAs.

Applying this checklist turns vibe coding from a risky experiment into a governable source of productivity.

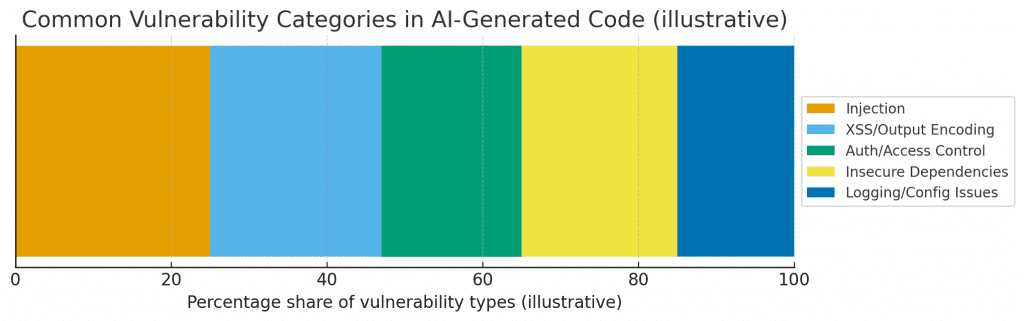

Visualizing the problem (three charts)

Below are three illustrative charts that summarize the problem and surface areas to monitor. They are intended to help communicate risk and prioritize controls.

- AI-generated Code: Proportion with Security Flaws. A Veracode study indicates that roughly 45% of AI-generated snippets contain security flaws; this pie chart visualizes that headline figure (TechRadar).


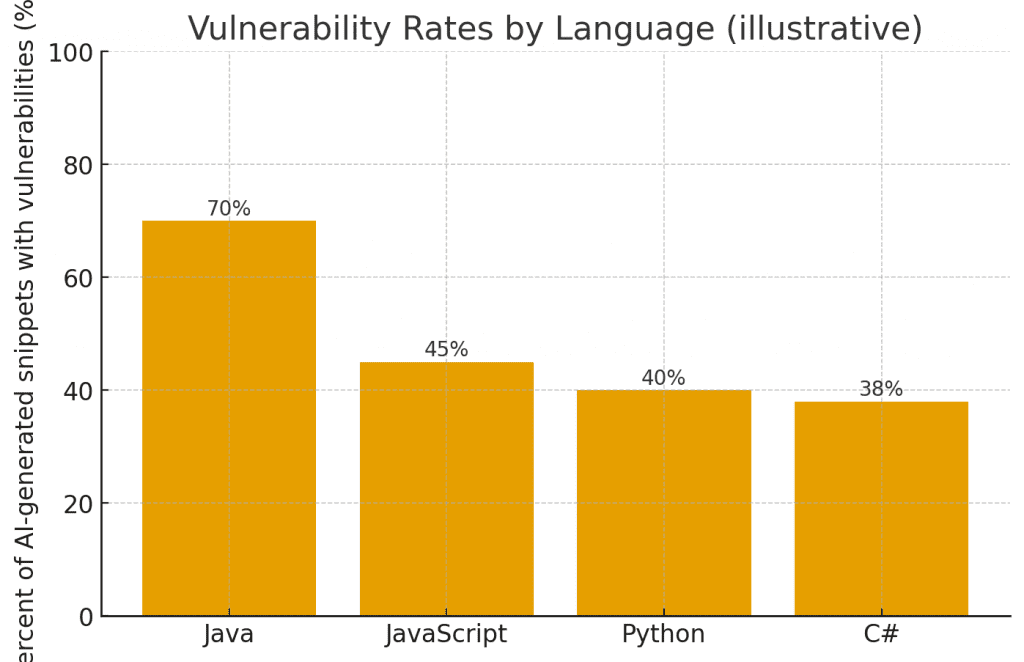

Vulnerability Rates by Language (illustrative) — reported findings show Java is disproportionately affected in some AI-generated samples (roughly ~70% in some samples), while Python, JavaScript and C# show lower but substantial rates. These bars are illustrative and highlight where extra attention for dependency and input handling is warranted.


- Common Vulnerability Categories in AI-Generated Code (illustrative distribution): a synthesized distribution of the most common classes (injection, XSS, auth issues, insecure dependencies, logging/config). This is an industry synthesis, not a precise breakdown from a single paper; it is meant to help prioritize testing (Databricks).


The developer experience: how to keep flow without losing safety

One of the reasons vibe coding is attractive is flow. You can preserve flow and still be secure by:

- Providing inline security nudges in the editor: non-blocking warnings that propose secure alternatives when the agent generates risky code.
- Making security checks invisible but fast (pre-commit hooks, instant local SAST) so developers get immediate feedback without breaking their iteration cadence.
- Allowing fast accept for low-risk churn (UI copy, styling tweaks) while gating critical subsystems.

The goal is minimum friction, maximum guardrails.

Practical prompts and patterns that improve outputs

Below are short prompt patterns teams have found useful in making LLM-generated code safer:

- “Security-first template”: “Generate code that uses parameterized queries, performs input normalization, and includes unit tests demonstrating both normal and malicious inputs.”
- “Explain then produce”: Ask the model to explain in one paragraph how the produced code defends against common attacks and list assumptions. If the explanation is shallow, require a human review.
- “Dependency vetting request”: “Suggest only libraries with stable support and no known CVEs in the last 12 months.” (Follow up with automated dependency scanning rather than human trust.)

These patterns reduce risk but do not replace static checks and human judgment.

Limitations of the evidence and thoughtful skepticism

- Studies show high flaw rates, but the real-world impact depends on how generated code is used. A throwaway script is different from a payment gateway; use-case context matters (TechRadar).
- Prompt engineering helps, but it is not a substitute for security engineering. In particular, models can be overconfident and produce security claims that sound plausible but are wrong (Databricks).
- Vendor differences matter: some providers offer enterprise privacy guarantees and private-instance options, which materially reduce data-exfiltration risk if properly contracted. Vendor selection must be part of the security design.

Final recommendations: pragmatic, prioritized actions

If your organization wants to benefit from vibe coding while managing risk, start here:

- Deny blanket acceptance in production. No generated code that touches sensitive systems should be accepted without review.
- Instrument the whole lifecycle. From prompt to deployed artifact: log, scan, and gate.
- Start with low-risk use cases. Use vibe coding for prototypes, admin UIs, or internal automation with strict limits.
- Invest in automation early. CI gates, dependency policies, and secret scanning pay for themselves fast.
- Make approval human + automated. Humans make judgment calls; automation enforces repeatable rules.
- Measure and iterate. Track flaw rates in generated PRs and reduce them with targeted training, templates, and policy updates.

Adopt these steps incrementally; the investment is light compared to the cost of remediating production security incidents that could have been caught at generation time.

Resources & citations

Key research and reporting cited in this article (selected highlights):

- A large-scale security study reported that roughly 45% of AI-generated code samples contained security flaws; the report also highlighted language-specific differences, with Java showing particularly high flaw incidence in some samples (TechRadar).
- Engineering research and vendor blogs describe practical techniques for prompt-based security improvements and the limits of such approaches.
- Market reporting and investigative pieces document the rise and rapid evolution of vibe-coding platforms and the operational pressures these platforms face as heavy users stress inference costs and churn dynamics.
- Authoritative explanations of the term and its practical meaning are available from technical vendor overviews and community synopses.

Closing thought

Vibe coding is a powerful productivity phenomenon: it democratizes code creation and speeds iteration. But it also changes the threat model and removes some of the human safety nets that have guarded software systems for decades. The responsible path is to keep the benefits — rapid iteration and creativity — while rebuilding guardrails at the speed of adoption: instrument prompts, run automated defenses, require human checkpoints for risky outputs, and treat AI-generated code as a first-class input to your security lifecycle rather than a shortcut that avoids it.